Speech and Audio Technology Laboratory

Recommended Papers

Improving automatic speech recognition performance for low-resource languages with self-supervised models

- DOI:

- 10.1109/JSTSP.2022.3184480

- Published in:

- IEEE Journal of Selected Topics in Signal Processing

- Abstract:

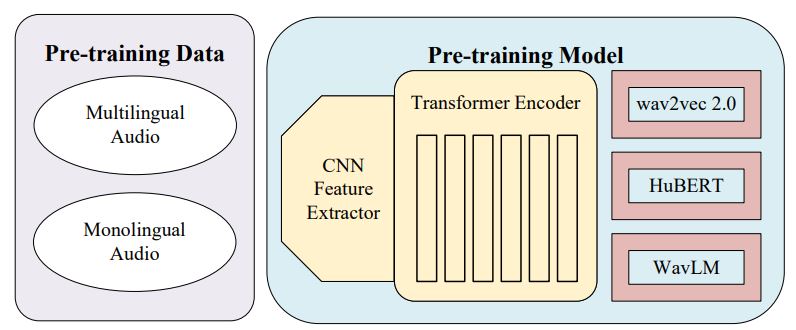

- Speech self-supervised learning has attracted much attention due to its promising performance in multiple downstream tasks, and has become a new growth engine for speech recognition in low-resource languages. In this paper, we exploit and analyze a series of wav2vec pre-trained models for speech recognition in 15 low-resource languages in the OpenASR21 Challenge. The investigation covers two important variables during pre-training, three fine-tuning methods, as well as applications in End-to-End and hybrid systems. First, pre-trained models with different pre-training audio data and architectures (wav2vec2.0, HuBERT and WavLM) are explored for their speech recognition performance in low-resource languages. Second, we investigate data utilization, multilingual learning, and the use of a phoneme-level recognition task in fine-tuning. Furthermore, we explore what effect fine-tuning has on the similarity of representations extracted from different transformer layers. The similarity analyses cover different pre-trained architectures and fine-tuning languages. We apply pre-trained representations to End-to-End and hybrid systems to confirm our representation analyses, which have obtained better performances as well.

- First author:

- Jing Zhao

- Paper type:

- Journal article

- Corresponding author:

- Wei-Qiang Zhang

- Is a translation:

- No

- Publication date:

- 2022-06-20

- Journal link:

- https://ieeexplore.ieee.org/document/9801640