Unstructured pruning and low rank factorisation of self-supervised pre-trained speech models

Release time: 2024/09/28

- DOI number:

- 10.1109/JSTSP.2024.3433616

- Journal:

- IEEE Journal of Selected Topics in Signal Processing

- Abstract:

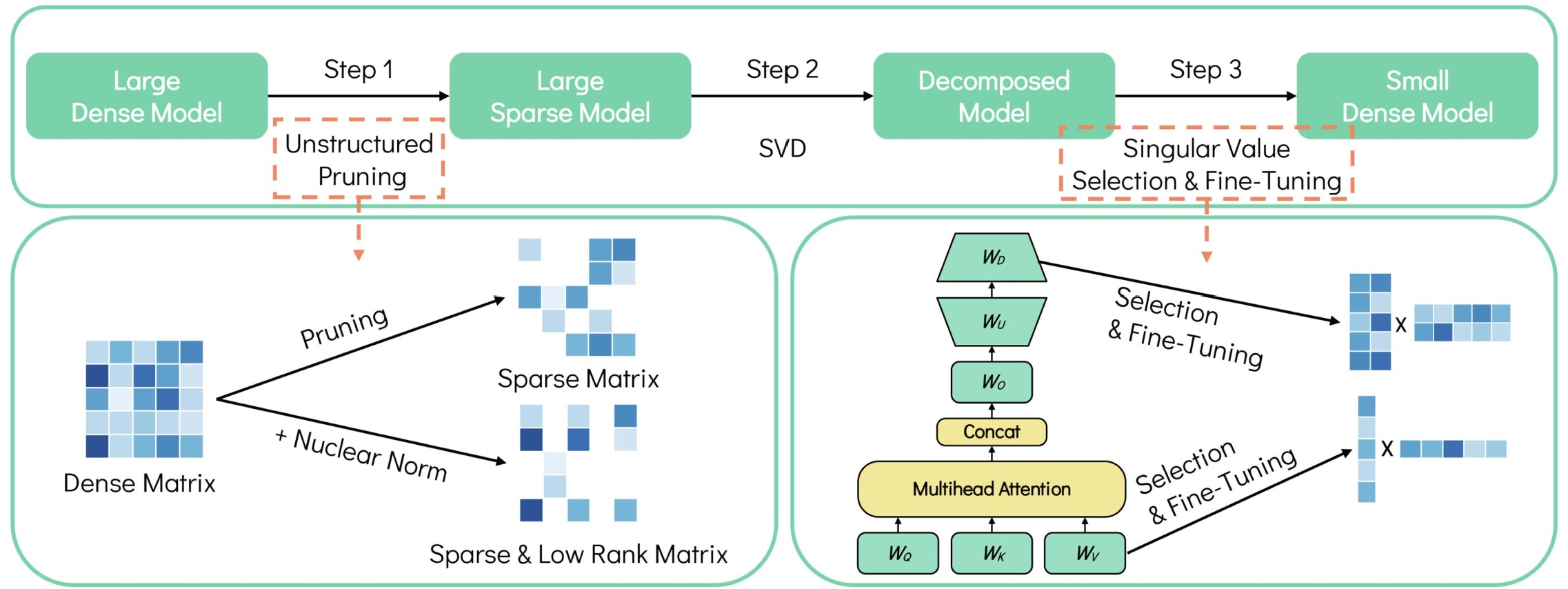

- Self-supervised pre-trained speech models require significant memory and computational resources, limiting their applicability to many speech tasks. Unstructured pruning is a compression method that can achieve minimal performance degradation, while the resulting sparse matrix mandates special hardware or computational operators for acceleration. In this study, we propose a novel approach that leverages the potential low-rank structures of the unstructured sparse matrices by applying truncated singular value decomposition (SVD), thus converting them into parameter-efficient dense models. Moreover, we introduce nuclear norm regularisation to ensure lower rank and a learnable singular value selection strategy to determine the approximate truncation rate for each matrix. Experiments on multiple speech tasks demonstrate that the proposed method can convert an unstructured sparse model into a light-weight and hardware-friendly dense model with comparable or superior performance.

- First Author:

- Haoyu Wang

- Indexed by:

- Journal paper

- Corresponding Author:

- Wei-Qiang Zhang

- Translation or Not:

- no

- Date of Publication:

- 2024/07/25

- Links to published journals:

- https://ieeexplore.ieee.org/document/10609479
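The core step named in the abstract, applying truncated SVD to an unstructured sparse weight matrix so that it can be stored as two small dense factors, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the matrix sizes, the 50% random mask, the fixed rank, and the helper names `truncated_svd_factors` and `nuclear_norm` are all assumptions made for illustration. The paper's learnable singular value selection is replaced here by a hand-picked rank, and the nuclear norm is only computed, not used as a training regulariser.

```python
import numpy as np


def truncated_svd_factors(weight, rank):
    """Return dense factors (a, b) such that a @ b is the best
    rank-`rank` approximation of `weight` in the Frobenius norm."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    a = u[:, :rank] * s[:rank]  # shape (out_dim, rank), singular values folded in
    b = vt[:rank, :]            # shape (rank, in_dim)
    return a, b


def nuclear_norm(weight):
    """Sum of singular values; penalising it encourages low rank."""
    return np.linalg.svd(weight, compute_uv=False).sum()


# Toy stand-in for a pruned layer (assumption): a low-rank matrix
# with roughly half of its entries zeroed by an unstructured mask.
rng = np.random.default_rng(0)
out_dim, in_dim = 64, 48
dense = rng.standard_normal((out_dim, 4)) @ rng.standard_normal((4, in_dim))
mask = rng.random((out_dim, in_dim)) > 0.5
sparse_weight = dense * mask

rank = 8  # hand-picked here; the paper learns a truncation rate per matrix
a, b = truncated_svd_factors(sparse_weight, rank)
approx = a @ b

# Relative reconstruction error and parameter counts of the two forms.
rel_error = np.linalg.norm(sparse_weight - approx) / np.linalg.norm(sparse_weight)
params_dense = out_dim * in_dim
params_factored = rank * (out_dim + in_dim)
singular_values = np.linalg.svd(sparse_weight, compute_uv=False)

print(f"relative error: {rel_error:.3f}")
print(f"parameters: {params_dense} dense vs {params_factored} factored")
print(f"nuclear norm: {nuclear_norm(sparse_weight):.2f}")
```

A layer computing `weight @ x` can then be replaced by `a @ (b @ x)`, which uses only ordinary dense matrix products. The factored form saves parameters whenever `rank < out_dim * in_dim / (out_dim + in_dim)`.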